
The Databricks Data + AI World Tour Paris, held on December 2 at Paris La Défense Arena, was an exceptional convergence of data-driven companies, technology experts, and business innovators.

This crucial stop on the global tour showcased the transformative power of the Databricks Data Intelligence Platform, with a sharp focus on AI-driven insights, scalable data management, and compelling, industry-specific use cases.

The event highlighted the accelerating importance of data-driven transformation across France and the broader European region. Attendees had the opportunity to explore cutting-edge advancements in the unified Lakehouse architecture and the industrialization of Generative AI, while engaging with leading practitioners in the field.

This report analyzes the strategic stakes and key features presented at the Databricks Data + AI World Tour Paris, and details the role of strategic alliances in helping businesses use the platform to turn data into measurable business impact.

The event confirmed two fundamental transitions for businesses: the industrialized adoption of AI and the end of data silos, both underpinned by sobering market realities.

The analytical thread for Generative AI marked a passage from simple experimentation to governance and industrialization at enterprise scale. The strategic challenge was to ensure that AI applications generated reliable and secure results.

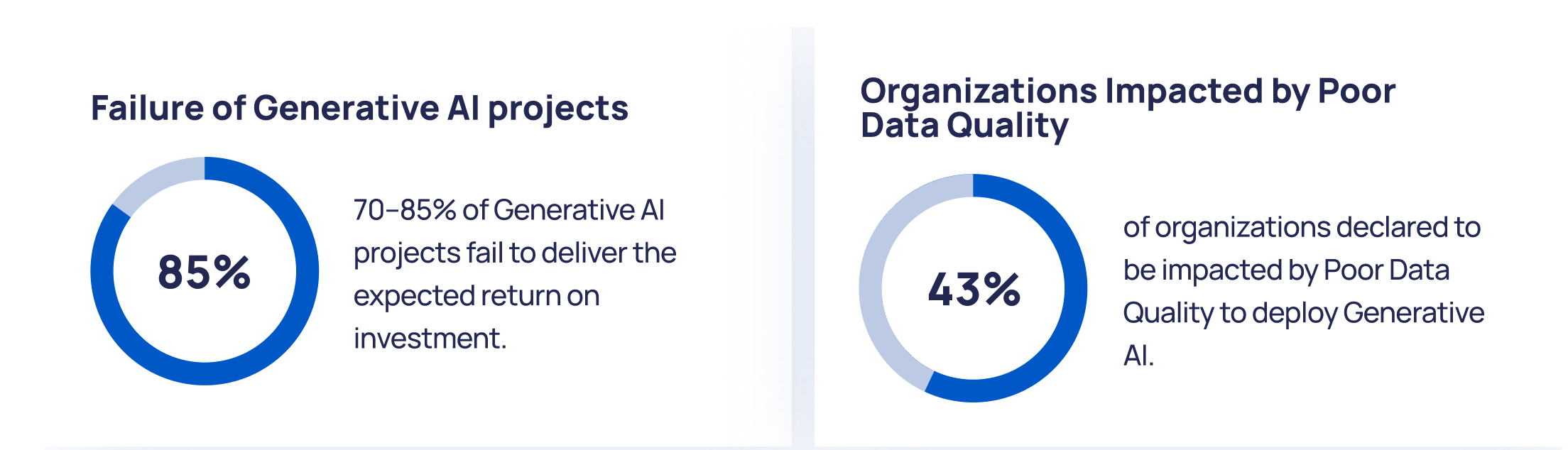

This need has been reinforced by market realities:

This failure rate is often due to poor data quality, fragmented datasets and the absence of governance.

In other words, without reliable, unified and traceable data, GenAI cannot move beyond isolated proofs of concept.

Generative AI begins to create measurable value when it is connected to internal proprietary data through RAG, secured and governed, traceable (lineage), monitored (observability), and embedded directly in business workflows.

Industrializing GenAI means making it reliable, auditable and operational, so that it can support business decision-making rather than remain a standalone prototype.

When Generative AI is properly industrialized, governed, connected to internal data, and integrated into business workflows, it stops being an experiment and starts driving performance. The real shift doesn’t come from the model itself, but from the foundations around it: data reliability, lineage, access control and observability. With these in place, AI delivers repeatable value across the organization instead of isolated use cases.
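To make these foundations concrete, here is a minimal sketch of a governed retrieval step for RAG, written in plain Python. Everything in it is an assumption made for illustration: the `Document` and `GovernedRetriever` names, the group-based access check, and the word-overlap scoring, which stands in for a real vector search. It is not the Databricks implementation. It only shows where access control, lineage and observability sit relative to the model call.

```python
import re
from dataclasses import dataclass, field


def tokens(text: str) -> set:
    """Lowercase word tokens; a stand-in for real embeddings."""
    return set(re.findall(r"\w+", text.lower()))


@dataclass(frozen=True)
class Document:
    doc_id: str                # stable identifier, reused for lineage
    text: str
    allowed_groups: frozenset  # groups permitted to read this document


@dataclass
class GovernedRetriever:
    documents: list
    audit_log: list = field(default_factory=list)  # observability: one entry per query

    def retrieve(self, question: str, user_groups: set, top_k: int = 2) -> list:
        query_terms = tokens(question)
        # Access control: drop documents the caller is not allowed to read.
        visible = [d for d in self.documents if d.allowed_groups & user_groups]
        # Toy relevance score: number of shared terms (hypothetical scoring).
        scored = [(len(query_terms & tokens(d.text)), d) for d in visible]
        ranked = sorted((s for s in scored if s[0] > 0), key=lambda s: s[0], reverse=True)
        hits = [d for _, d in ranked[:top_k]]
        self.audit_log.append({"question": question, "sources": [d.doc_id for d in hits]})
        return hits


def build_prompt(question: str, hits: list) -> str:
    # Lineage: every context line carries the id of the document it came from.
    context = "\n".join(f"[{d.doc_id}] {d.text}" for d in hits)
    return f"Answer using only the context below.\n{context}\nQuestion: {question}"


if __name__ == "__main__":
    retriever = GovernedRetriever([
        Document("hr-001", "Salary bands for engineering roles", frozenset({"hr"})),
        Document("fin-007", "Refund policy allows returns within 30 days",
                 frozenset({"finance", "support"})),
    ])
    found = retriever.retrieve("What is our refund policy?", {"support"})
    print(build_prompt("What is our refund policy?", found))
    print(retriever.audit_log)
```

In this sketch a caller in the `support` group never sees `hr-001`, the prompt cites the identifier of each source, and every query leaves an audit entry. Those are the three properties the text attributes to industrialized GenAI, independent of which model answers the prompt.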


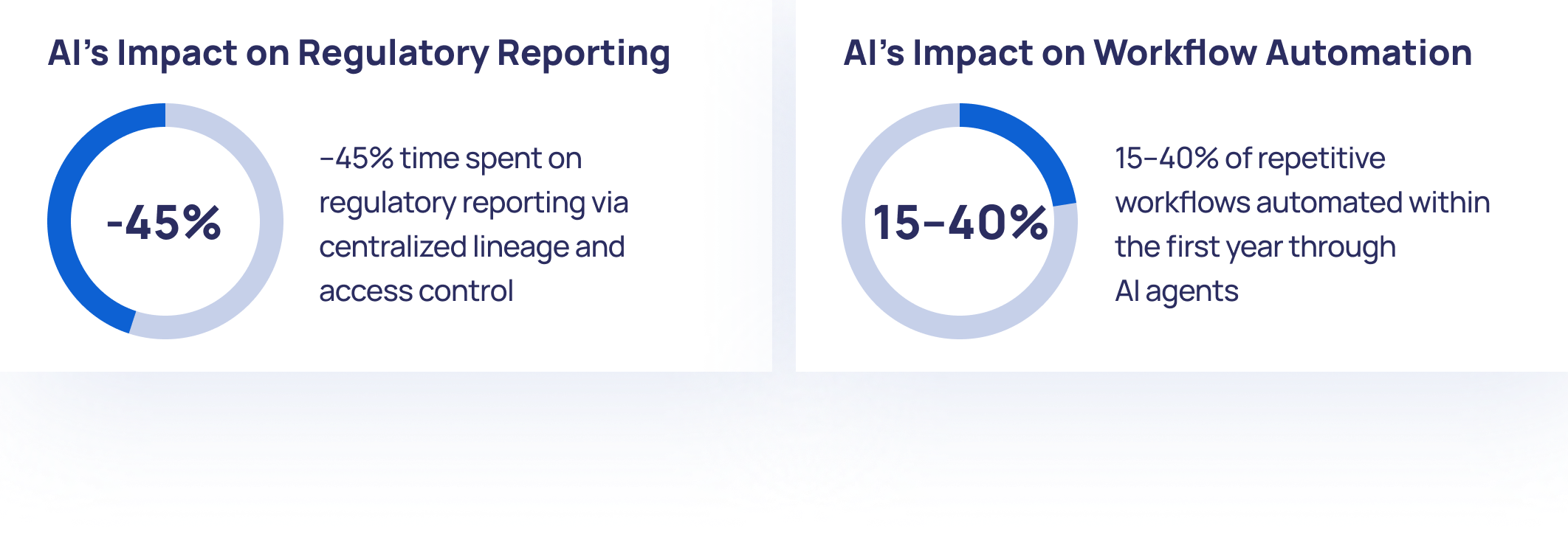

The core message of the day was the mandatory convergence of architectures to streamline information access and fuel AI. The event insisted that AI requires immediate access to all data, whether structured (Data Warehouse) or unstructured (Data Lake).

AI must access structured and unstructured data in real time, across all business domains. Organizations maintaining siloed landscapes are losing innovation speed and productivity.

The Databricks Lakehouse and Unity Catalog emerged as the reference architecture to unify data, analytics and AI models with security, lineage and governance by default.

The Databricks architecture delivers unified governance not only conceptually but operationally. The Lakehouse consolidates all enterprise data (structured and unstructured, real-time and historical) into one foundation for analytics and AI.

This is what enables AI to move from experimentation to reliable industrialization. With Lakehouse + Unity Catalog, AI models operate on complete, continuously updated and fully traceable data, which helps reduce hallucinations, limit model drift and close compliance gaps.


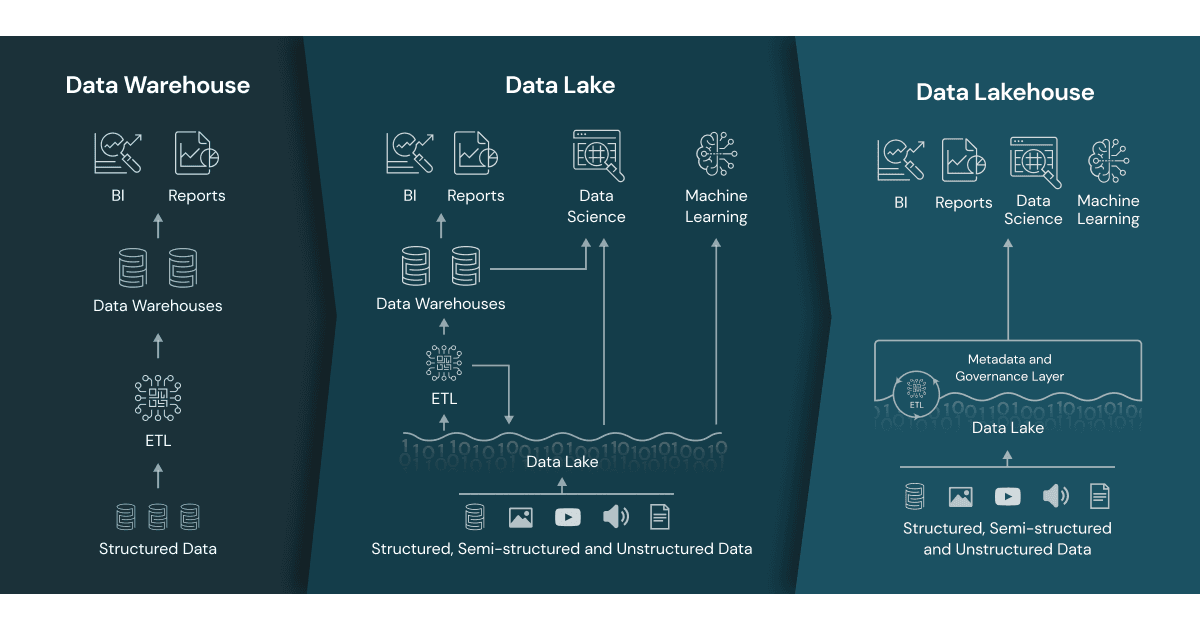

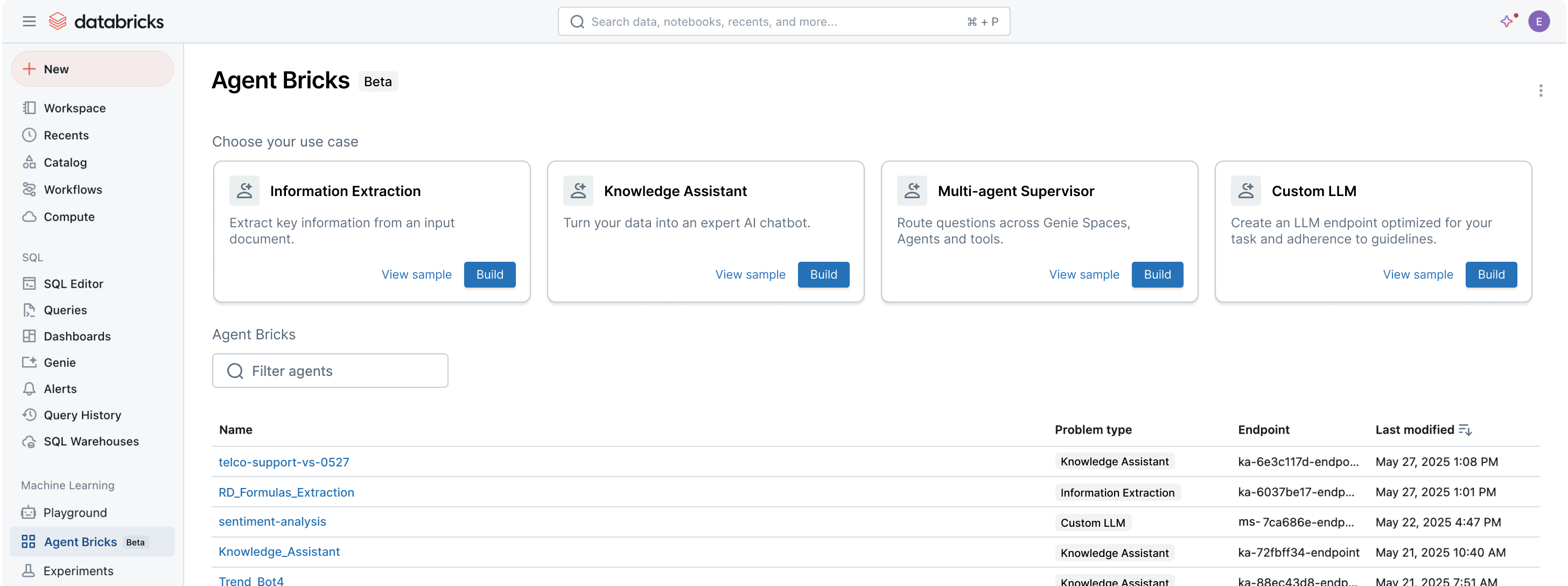

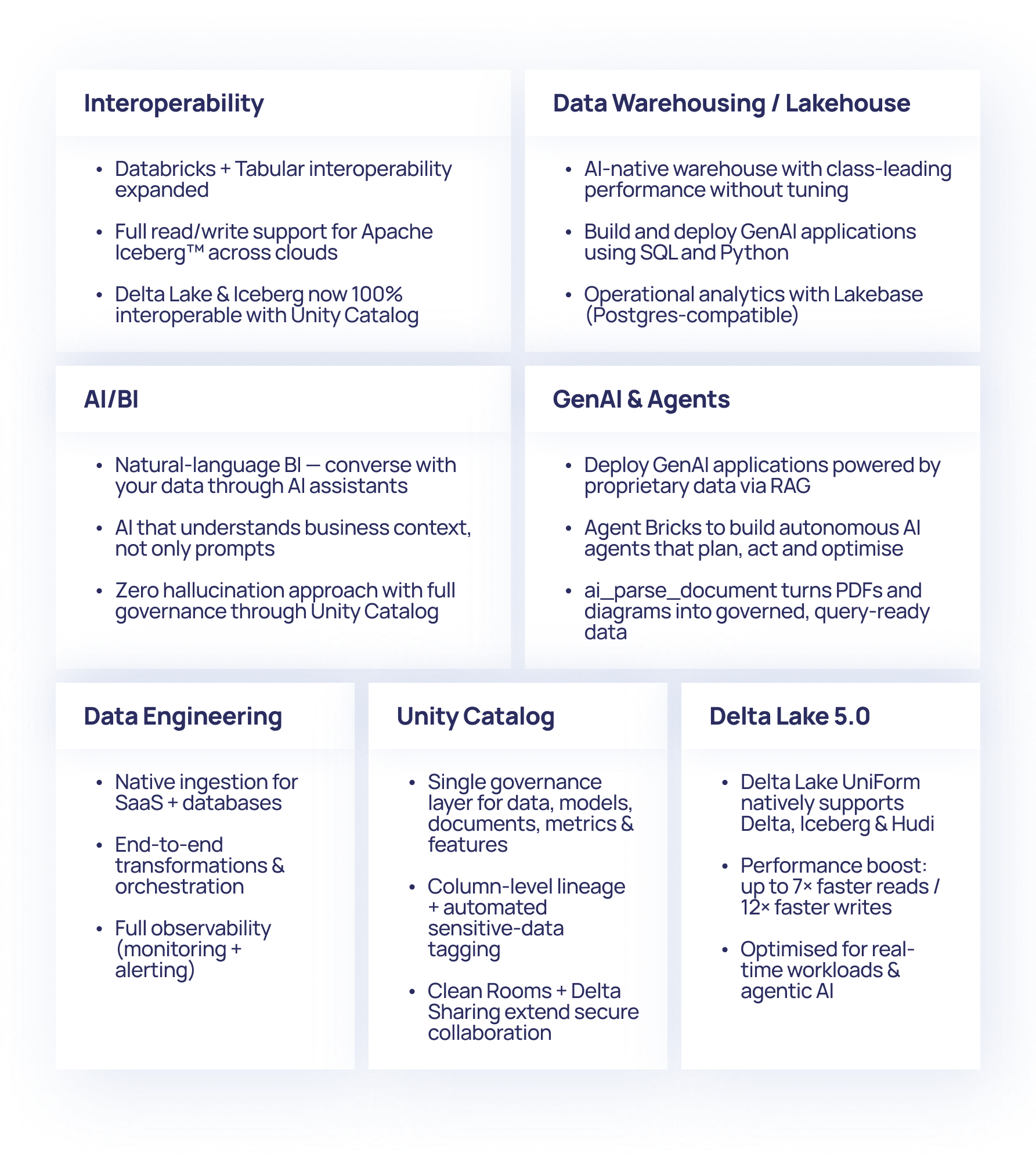

The Databricks platform showcased new capabilities that addressed market challenges by focusing on simplifying and democratizing Data Intelligence.

Unity Catalog now sits at the core of the platform as the governance layer that secures and standardizes all data and AI assets. It unifies access policies, lineage and compliance for structured and unstructured data, ML models, metrics and features through a single control plane.


The addition of full Apache Iceberg support reinforces its open-architecture approach, removing format lock-in and enabling cross-cloud collaboration.


New governance features such as automated sensitive-data tagging, column-level lineage and standardized business metrics make data not only accessible but reliable, explainable and auditable at scale: the foundation required for enterprise AI. Combined with new document-intelligence capabilities (via ai_parse_document), Unity Catalog now brings governance even to PDFs, diagrams and mixed-format documents, ensuring that unstructured content can be safely integrated into AI workflows without parallel extraction or custom tooling.
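The way automated tagging and column-level lineage reinforce each other can be sketched in a few lines of Python. This is an illustration of the idea only, not how Unity Catalog works internally. The two regular expressions, the function names and the table and column names are all hypothetical. A column is tagged when every sampled value matches a pattern, then the tag is pushed to every downstream column reachable through the lineage edges.

```python
import re
from collections import defaultdict, deque

# Hypothetical detection rules; real classifiers are far richer than two regexes.
SENSITIVE_PATTERNS = {
    "email": re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$"),
    "iban": re.compile(r"^[A-Z]{2}\d{2}[A-Z0-9]{11,30}$"),
}


def tag_columns(samples: dict) -> dict:
    """Tag a column when every sampled value matches one pattern."""
    tags = {}
    for column, values in samples.items():
        for tag, pattern in SENSITIVE_PATTERNS.items():
            if values and all(pattern.match(v) for v in values):
                tags[column] = tag
                break
    return tags


def propagate_tags(tags: dict, lineage: list) -> dict:
    """Push each tag to every downstream column reachable in the lineage graph."""
    downstream = defaultdict(list)
    for source, target in lineage:
        downstream[source].append(target)
    result = {column: {tag} for column, tag in tags.items()}
    queue = deque(tags)
    while queue:
        column = queue.popleft()
        for target in downstream[column]:
            before = len(result.setdefault(target, set()))
            result[target] |= result[column]
            if len(result[target]) > before:  # revisit only when something changed
                queue.append(target)
    return result


if __name__ == "__main__":
    samples = {
        "crm.customers.email": ["a@example.com", "b@example.org"],
        "crm.customers.city": ["Paris", "Lyon"],
    }
    lineage = [  # (source column, derived column) pairs, all hypothetical
        ("crm.customers.email", "gold.contacts.email"),
        ("gold.contacts.email", "ml.features.contact_key"),
        ("crm.customers.city", "gold.contacts.city"),
    ]
    print(propagate_tags(tag_columns(samples), lineage))
```

The sketch shows why column-level lineage matters for AI workloads. The feature column `ml.features.contact_key` inherits the `email` tag even though nobody tagged it by hand, so a policy written against the tag also covers derived data.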

Agent Bricks positions Databricks at the forefront of the shift toward agentic AI, enabling systems that can act, reason and collaborate autonomously within business workflows. The framework provides the building blocks for agents that can:

Agent Bricks introduces a framework for building autonomous AI agents capable of planning, acting and optimizing within business processes. To accelerate adoption, Databricks has also launched dedicated AI agent training and deployment frameworks, equipping teams with the skills and standard patterns required to move from experimentation to production-grade, governed agentic systems.
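As a rough picture of what "planning and acting" under governance means, the loop below is a minimal, framework-agnostic sketch in Python. It does not use or imitate the Agent Bricks API. The `run_agent` function, the scripted planner and the two tools are hypothetical stand-ins, with the planner playing the role a language model would play in a real system.

```python
def run_agent(goal, planner, tools, max_steps=5):
    """Plan-act loop: ask the planner for the next action, run it, record it.

    `tools` doubles as an allow-list: any tool the planner asks for that is
    not registered is refused and logged instead of executed.
    """
    history = []  # audit trail of (tool name, argument, outcome)
    for _ in range(max_steps):  # hard step budget keeps the loop bounded
        action = planner(goal, history)
        if action is None:  # planner considers the goal reached
            break
        tool_name, argument = action
        if tool_name not in tools:
            history.append((tool_name, argument, "refused: tool not allowed"))
            continue
        history.append((tool_name, argument, tools[tool_name](argument)))
    return history


def scripted_planner(goal, history):
    """Stand-in for a model: walks through a fixed two-step plan."""
    steps = [("lookup_order", goal), ("draft_reply", goal)]
    return steps[len(history)] if len(history) < len(steps) else None


if __name__ == "__main__":
    tools = {
        "lookup_order": lambda order_id: f"order {order_id}: shipped",
        "draft_reply": lambda order_id: f"reply drafted for {order_id}",
    }
    for entry in run_agent("A-17", scripted_planner, tools):
        print(entry)
```

The design choice worth noting is that autonomy is bounded from the outside. The step budget and the tool allow-list live in the loop, not in the planner, and every action, including refused ones, lands in the history that is returned for audit.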

SAP data historically locked behind complex extraction processes becomes fully operational in real time.


Combined, they allow enterprises to:

This represents a significant step forward for any organization where SAP is the backbone of operational data.

When analyzing Data & AI transformations across industries, one conclusion consistently emerges: the hardest part is not the algorithm, the architecture, or the platform. The hardest part is the organization. Most companies assume that scaling Data & AI is primarily a matter of:

There are four sources of uncertainty that determine the success or failure of a program:

Organizations often believe they need more tools, more features and more use cases to accelerate. In reality, the most successful transformations are driven by something else: they remove uncertainty faster than they add capabilities.

When people trust the data, trust the process, and know who is accountable for what, the pace of execution changes instantly. Models improve faster. Governance becomes a competitive advantage. GenAI deployments become predictable instead of experimental.

When this foundation is in place, organizations stop trying to accelerate through tools and instead accelerate through clarity. Teams no longer spend time questioning the data, redefining processes, or debating responsibilities; they execute. The focus shifts from fixing to delivering, from troubleshooting to scaling, from isolated wins to repeatable outcomes. The companies that grow the fastest aren’t the ones that deploy the most technologies; they are the ones that remove uncertainty systematically, so every new use case becomes easier than the previous one. This is what unlocks true scale.

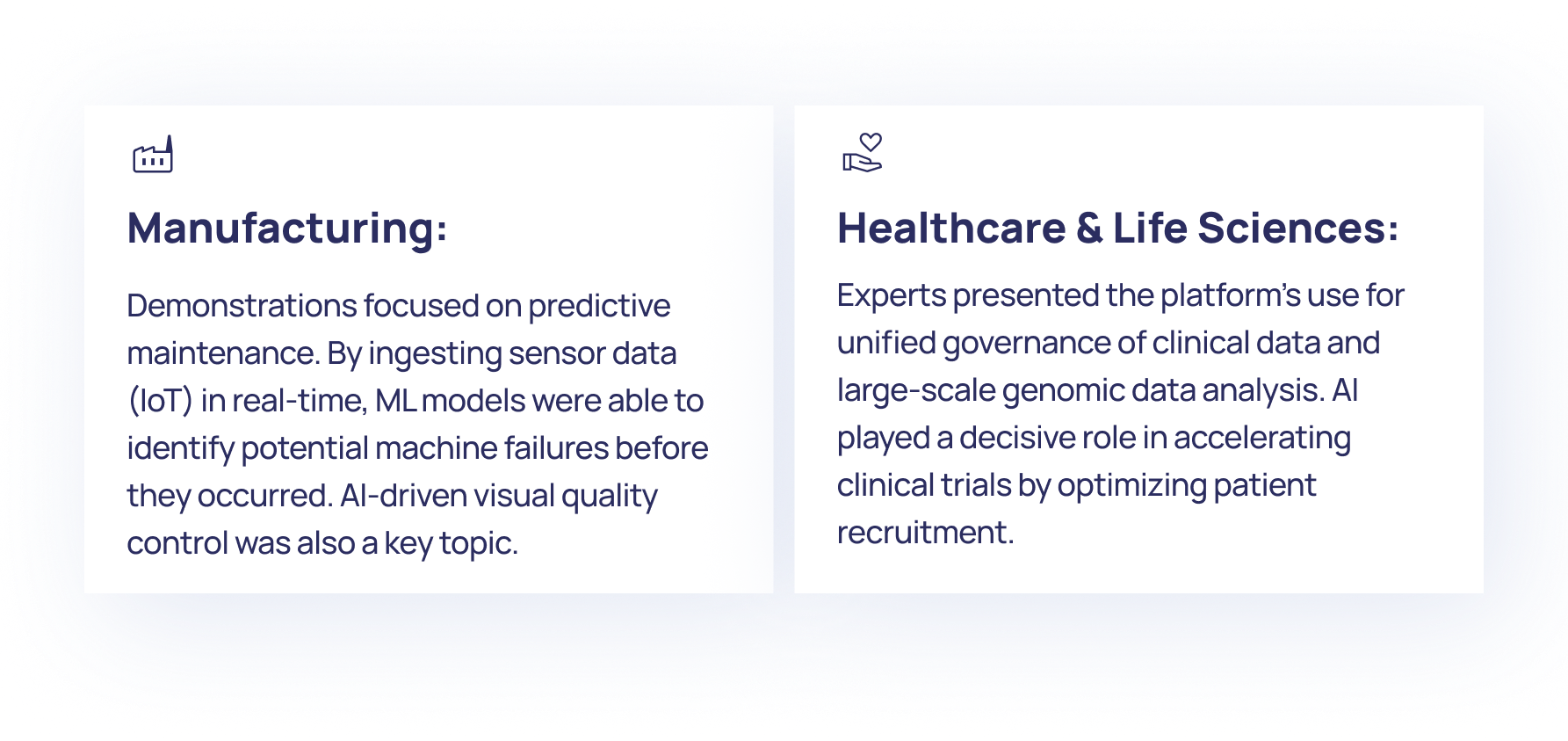

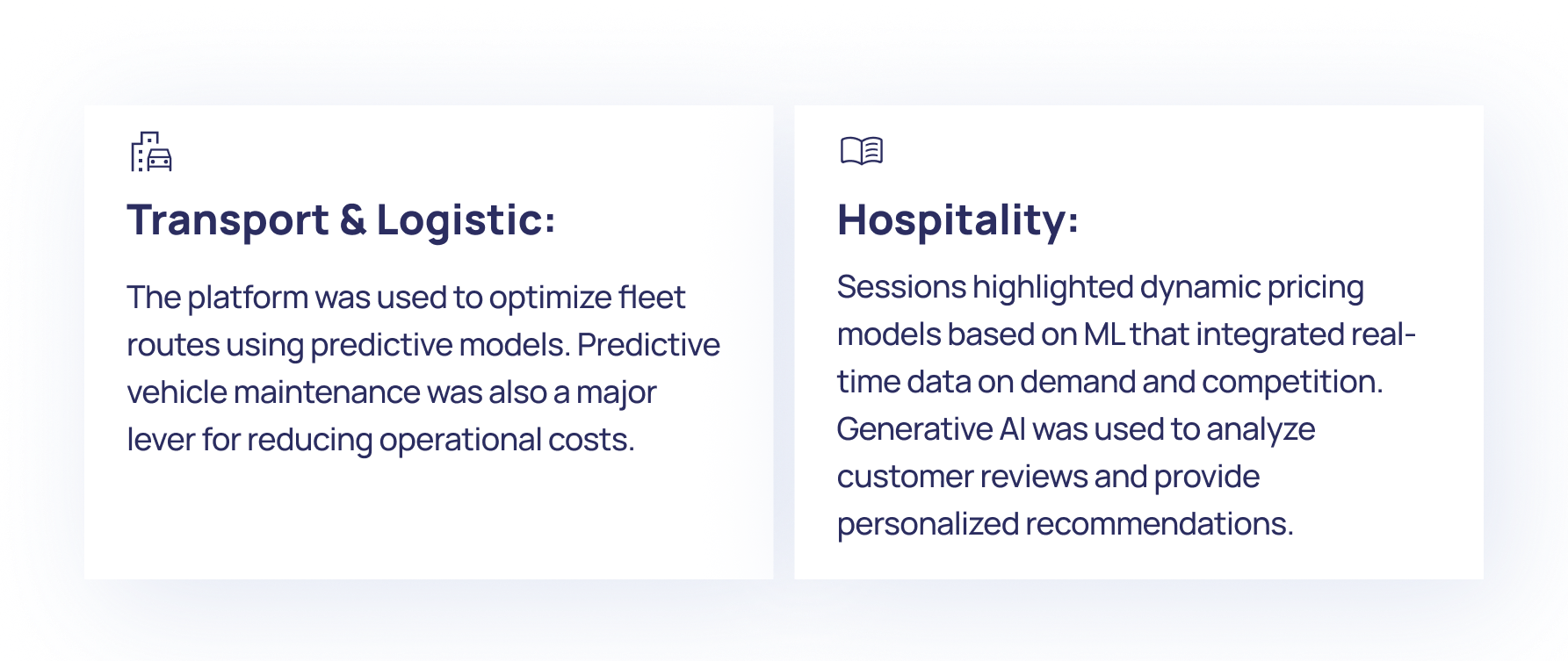

The event demonstrated how adoption of the Data Intelligence Platform is meant to transform business functions across industries.

The conclusions from the Data + AI World Tour Paris necessitate an immediate action plan to translate analysis into competitive advantage.

The Databricks Data + AI World Tour Paris made one point unmistakable: market leadership will belong to enterprises that achieve data agility at scale. Not by multiplying tools, but by converting data into governed insight and automated action with speed and consistency.

The Lakehouse emerged as the only sustainable architecture capable of unifying governance, streaming, analytics and AI into a single value engine. Unity Catalog confirmed that governance is now the prerequisite for industrializing Generative AI, turning it from experimentation into secure and auditable business automation across mission-critical processes.

The real differentiator is not AI adoption. It is the ability to operationalize AI in a repeatable and reliable way. Organizations that build this execution model see every new use case become easier, faster and more impactful than the previous one. The next decade will not reward companies that invest the most in AI. It will reward those that turn data into governed business outcomes the fastest.